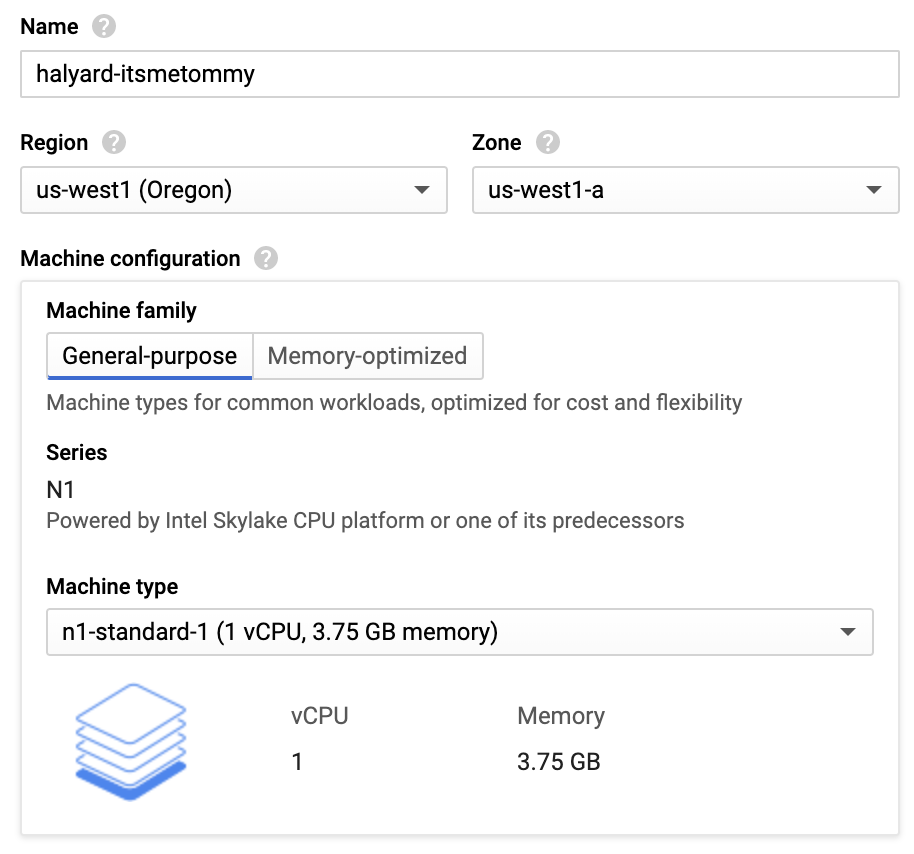

Create Halyard Instance w/ Persistent Disk

Click CREATE INSTANCE.

Important: Make sure to create your instance in the same network/zone as your Kubernetes cluster.
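To find which VPC network and location your cluster uses, you can ask `gcloud` directly. This is a minimal sketch. The function name is hypothetical, `my-cluster` and `us-west1-a` are placeholders, and `--zone` assumes a zonal cluster (a regional cluster takes `--region` instead).

```shell
# Print the VPC network and location of a GKE cluster, so the Halyard VM
# can be created in the same network/zone. Arguments are placeholders.
cluster_network_and_location() {
  local cluster_name="$1" cluster_zone="$2"
  gcloud container clusters describe "$cluster_name" \
    --zone "$cluster_zone" \
    --format='value(network,location)'
}

# Usage (hypothetical cluster name and zone):
# cluster_network_and_location my-cluster us-west1-a
```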

Input Name, Region, Zone and Machine type.

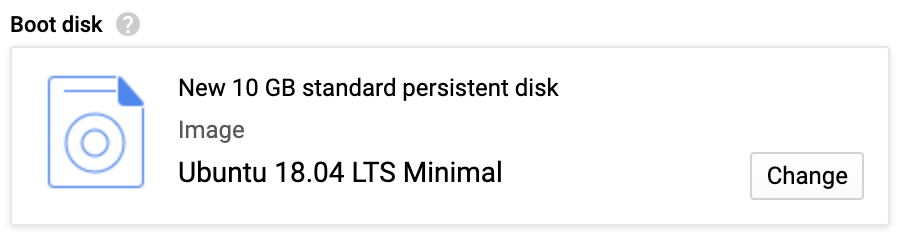

Select Ubuntu 18.04 LTS Minimal boot disk.

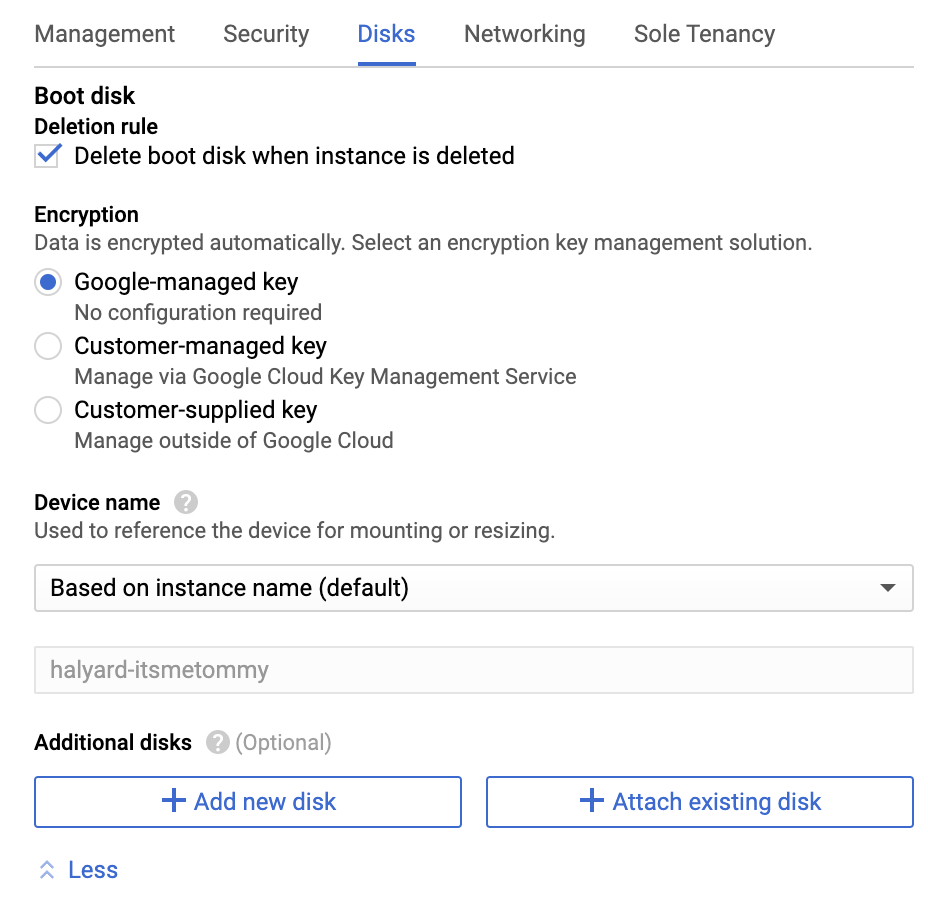

Click Management, security, disks, networking, sole tenancy.

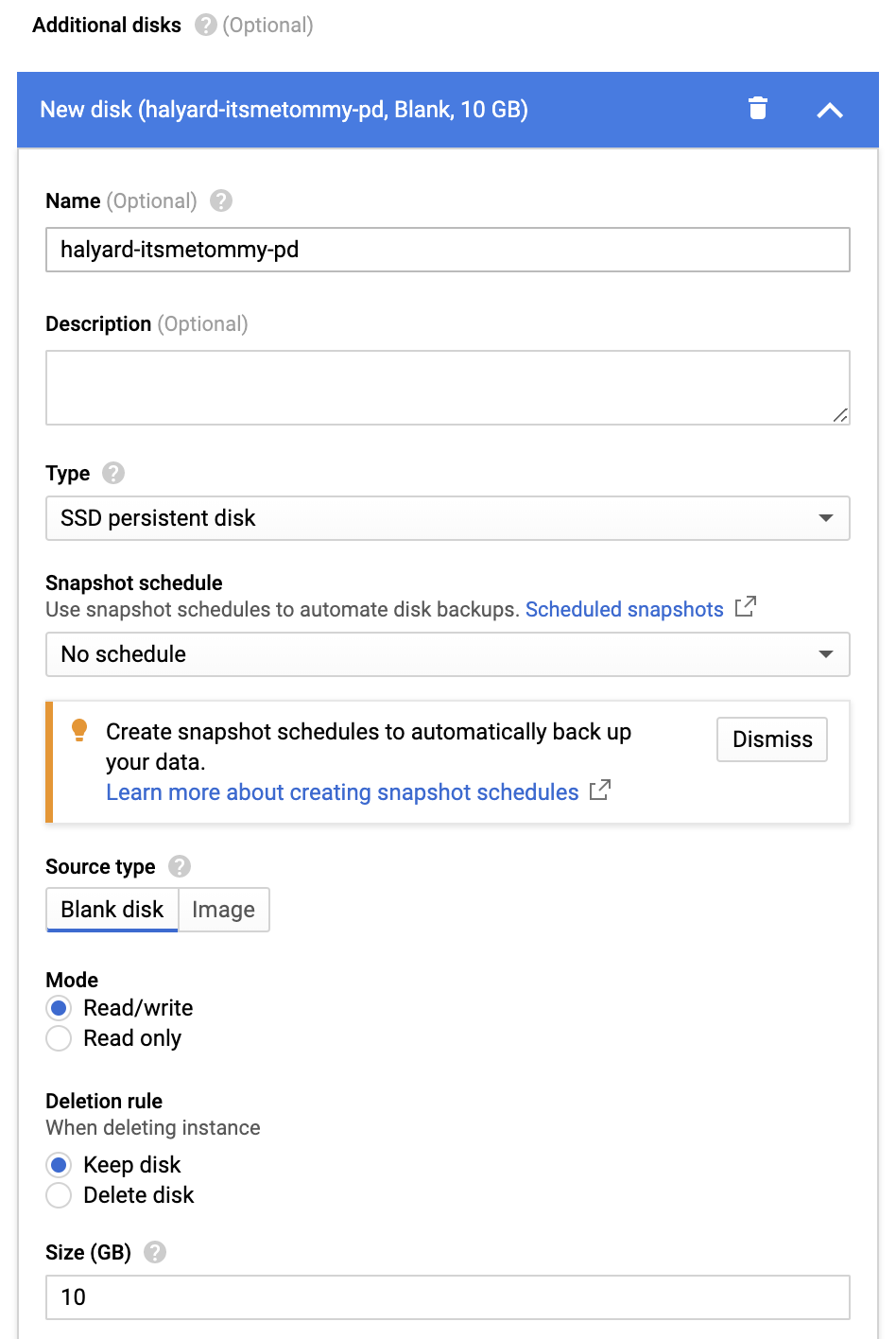

Select the Disks tab.

Under Additional disks, click the + Add new disk button.

Input your preferred Persistent Disk name and disk size (I selected the type SSD Persistent Disk and made it 10GB, but it’s up to you).

Click Create.

Note: You may need to add a Firewall rule depending on how you have your SSH access setup.
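If you prefer the command line to the console, the steps above roughly map to a single `gcloud compute instances create` call. This is a sketch under a few assumptions:

- The function name is hypothetical.
- The machine type and zone are examples.
- The image family is assumed to be `ubuntu-minimal-1804-lts` in the `ubuntu-os-cloud` project. Ubuntu 18.04 images may be deprecated by now, so check what is available.
- `auto-delete=no` keeps the data disk when the VM is deleted, which matches the separate disk deletion in the clean-up section.

```shell
# Create the Halyard VM plus an additional SSD persistent disk,
# mirroring the console steps. All argument values are examples.
create_halyard_vm() {
  local vm_name="$1" zone="$2" machine_type="$3" disk_name="$4" disk_size="$5"
  gcloud compute instances create "$vm_name" \
    --zone "$zone" \
    --machine-type "$machine_type" \
    --image-family ubuntu-minimal-1804-lts \
    --image-project ubuntu-os-cloud \
    --create-disk "name=${disk_name},size=${disk_size},type=pd-ssd,auto-delete=no"
}

# Usage (example values):
# create_halyard_vm halyard-itsmetommy us-west1-a n1-standard-2 halyard-itsmetommy-pd 10GB
```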

SSH into the GCE instance from localhost.

# External access

HALYARD_VM=halyard-itsmetommy

gcloud compute ssh $HALYARD_VM

# Internal access

HALYARD_VM=halyard-itsmetommy

gcloud compute ssh $HALYARD_VM --internal-ip

Ran from halyard Instance

Once created, format and mount the disk.

View disks.

sudo lsblk

Example

sudo lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

loop0 7:0 0 89.1M 1 loop /snap/core/7917

loop1 7:1 0 66.5M 1 loop /snap/google-cloud-sdk/103

sda 8:0 0 10G 0 disk

├─sda1 8:1 0 9.9G 0 part /

├─sda14 8:14 0 4M 0 part

└─sda15 8:15 0 106M 0 part /boot/efi

sdb 8:16 0 10G 0 disk

Format disk.
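`mkfs` erases whatever device it is given, so confirm the device name before running it. The sketch below lists whole disks that have no partitions. On a freshly created VM like the one above, that should be only the new persistent disk (`sdb`). It assumes `lsblk` raw output with the `NAME,TYPE,PKNAME` columns, and the function name is hypothetical. On GCE, the `/dev/disk/by-id/google-*` symlinks, named after each disk's device name, are another way to match a device to the disk you created.

```shell
# Print the names of whole disks that have no partitions.
# Expects `lsblk -rn -o NAME,TYPE,PKNAME` output on stdin; PKNAME is the
# parent device of a partition and is empty for whole disks and loop devices.
find_unpartitioned_disks() {
  awk '
    $2 == "part" { has_partitions[$3] = 1 }
    $2 == "disk" { disks[++count] = $1 }
    END {
      for (i = 1; i <= count; i++)
        if (!(disks[i] in has_partitions)) print disks[i]
    }
  '
}

# Usage on the Halyard VM:
# lsblk -rn -o NAME,TYPE,PKNAME | find_unpartitioned_disks
```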

sudo mkfs.ext4 -m 0 -F -E lazy_itable_init=0,lazy_journal_init=0,discard /dev/[DEVICE_ID]

Example

sudo mkfs.ext4 -m 0 -F -E lazy_itable_init=0,lazy_journal_init=0,discard /dev/sdb

Create mounting directory.

sudo mkdir -p /mnt/disks/[MNT_DIR]

Example

sudo mkdir -p /mnt/disks/halyard

Mount disk.

sudo mount -o discard,defaults /dev/[DEVICE_ID] /mnt/disks/[MNT_DIR]

Example

sudo mount -o discard,defaults /dev/sdb /mnt/disks/halyard

Configure read and write permissions.

sudo chmod a+w /mnt/disks/[MNT_DIR]

Example

sudo chmod a+w /mnt/disks/halyard

Create a backup of your current /etc/fstab file.

sudo cp /etc/fstab /etc/fstab.backup

Use the blkid command to find the UUID for the zonal persistent disk.

sudo blkid /dev/[DEVICE_ID]

Example

sudo blkid /dev/sdb

/dev/sdb: UUID="8f0e3174-6c5a-4208-a32b-44652ca8711f" TYPE="ext4"

Update /etc/fstab.
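The `tee -a` commands that follow append a line every time they run, so running them twice leaves a duplicate entry. Below is a minimal idempotent sketch that writes the same entry with the same mount options. The function name is hypothetical. It writes to whatever fstab path you pass, so paste it into a root shell when targeting `/etc/fstab`.

```shell
# Append an fstab entry for the given filesystem UUID unless one already exists.
# Arguments: UUID, mount directory, and optionally the fstab file to edit.
add_fstab_entry() {
  local uuid="$1" mnt_dir="$2" fstab="${3:-/etc/fstab}"
  if grep -q "^UUID=${uuid}[[:space:]]" "$fstab"; then
    echo "entry for UUID=${uuid} already present in ${fstab}" >&2
    return 0
  fi
  echo "UUID=${uuid} ${mnt_dir} ext4 discard,defaults,nofail 0 2" >> "$fstab"
}

# Usage from a root shell, then check that fstab parses and mounts cleanly:
# add_fstab_entry "$(blkid -s UUID -o value /dev/sdb)" /mnt/disks/halyard
# findmnt --verify
# mount -a
```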

echo UUID=`sudo blkid -s UUID -o value /dev/[DEVICE_ID]` /mnt/disks/[MNT_DIR] ext4 discard,defaults,nofail 0 2 | sudo tee -a /etc/fstab

Example

echo UUID=`sudo blkid -s UUID -o value /dev/sdb` /mnt/disks/halyard ext4 discard,defaults,nofail 0 2 | sudo tee -a /etc/fstab

Set Up Halyard Instance

Ran from halyard Instance

Run update.

sudo apt-get update

Create the working directories.

{

WORKING_DIRECTORY=/mnt/disks/halyard

mkdir ${WORKING_DIRECTORY}/.hal

mkdir ${WORKING_DIRECTORY}/.secret

mkdir ${WORKING_DIRECTORY}/resources

}

Install docker.

sudo apt install docker.io

Create a kubeconfig file for Halyard & Spinnaker

- https://www.spinnaker.io/setup/install/providers/kubernetes-v2/#optional-create-a-kubernetes-service-account

- https://docs.armory.io/spinnaker-install-admin-guides/manual-service-account/

Create the spinnaker namespace, ServiceAccount and ClusterRoleBinding

Ran from localhost

```shell
cat <<EOF | kubectl create -f -
apiVersion: v1
kind: Namespace
metadata:
  name: spinnaker
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: spinnaker-service-account
  namespace: spinnaker
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: spinnaker-admin
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: spinnaker-service-account
  namespace: spinnaker
EOF
```

Create a kubeconfig file from the Kubernetes Service Account
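The script below reads the token from the Secret listed in the service account's `.secrets` field. Kubernetes 1.24 and later no longer create that Secret automatically, so on a newer cluster that lookup comes back empty. A hedged sketch for that case, assuming you are on such a cluster:

1. Create a long-lived token Secret bound to the service account.
2. Give the token controller a moment to fill in `.data.token`.
3. Set `SECRET_NAME` to the Secret's name instead of using the `jsonpath` lookup.

The function name and the Secret name `spinnaker-service-account-token` are assumptions.

```shell
# Create a service-account token Secret (needed on Kubernetes 1.24+, where
# token Secrets are no longer generated automatically for service accounts).
create_sa_token_secret() {
  local sa_name="${1:-spinnaker-service-account}"
  local namespace="${2:-spinnaker}"
  local secret_name="${3:-${sa_name}-token}"
  kubectl apply -f - <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: ${secret_name}
  namespace: ${namespace}
  annotations:
    kubernetes.io/service-account.name: ${sa_name}
type: kubernetes.io/service-account-token
EOF
}

# Usage from localhost, before reading TOKEN_DATA in the script below:
# create_sa_token_secret spinnaker-service-account spinnaker
# SECRET_NAME=spinnaker-service-account-token
```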

Ran from localhost

```shell
SERVICE_ACCOUNT_NAME=spinnaker-service-account
CONTEXT=$(kubectl config current-context)
SA_NAMESPACE=spinnaker
PROJECT=$(gcloud info --format='value(config.project)')
KUBECONFIG_FILE=kubeconfig-spinnaker-itsmetommy-system-sa
NEW_CONTEXT=${SA_NAMESPACE}-sa

SECRET_NAME=$(kubectl get serviceaccount ${SERVICE_ACCOUNT_NAME} \
  --context ${CONTEXT} \
  --namespace ${SA_NAMESPACE} \
  -o jsonpath='{.secrets[0].name}')

TOKEN_DATA=$(kubectl get secret ${SECRET_NAME} \
  --context ${CONTEXT} \
  --namespace ${SA_NAMESPACE} \
  -o jsonpath='{.data.token}')

case "$(uname -s)" in
  Darwin*) TOKEN=$(echo ${TOKEN_DATA} | base64 -D);;
  Linux*) TOKEN=$(echo ${TOKEN_DATA} | base64 -d);;
  *) TOKEN=$(echo ${TOKEN_DATA} | base64 -d);;
esac

kubectl config view --raw > ${KUBECONFIG_FILE}.full.tmp

# Switch working context to correct context
kubectl --kubeconfig ${KUBECONFIG_FILE}.full.tmp config use-context ${CONTEXT}

# Minify
kubectl --kubeconfig ${KUBECONFIG_FILE}.full.tmp \
  config view --flatten --minify > ${KUBECONFIG_FILE}.tmp

# Rename context
kubectl config --kubeconfig ${KUBECONFIG_FILE}.tmp \
  rename-context ${CONTEXT} ${NEW_CONTEXT}

# Create token user
kubectl config --kubeconfig ${KUBECONFIG_FILE}.tmp \
  set-credentials ${CONTEXT}-${SA_NAMESPACE}-token-user \
  --token ${TOKEN}

# Set context to use token user
kubectl config --kubeconfig ${KUBECONFIG_FILE}.tmp \
  set-context ${NEW_CONTEXT} --user ${CONTEXT}-${SA_NAMESPACE}-token-user

# Set context to correct namespace
kubectl config --kubeconfig ${KUBECONFIG_FILE}.tmp \
  set-context ${NEW_CONTEXT} --namespace ${SA_NAMESPACE}

# Flatten/minify kubeconfig
kubectl config --kubeconfig ${KUBECONFIG_FILE}.tmp \
  view --flatten --minify > ${KUBECONFIG_FILE}

# Remove tmp
rm ${KUBECONFIG_FILE}.full.tmp
rm ${KUBECONFIG_FILE}.tmp
```

Copy the kubeconfig file to the Halyard GCE Instance.
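Before copying the file, you can check that it authenticates as the service account and that the ClusterRoleBinding took effect. With the `cluster-admin` binding above, `kubectl auth can-i '*' '*'` should print `yes`. A minimal sketch; the function name is hypothetical.

```shell
# Sanity-check a generated kubeconfig: print its current context, then ask the
# API server whether this identity may perform any action on any resource.
verify_spinnaker_kubeconfig() {
  local kubeconfig_file="$1"
  kubectl --kubeconfig "$kubeconfig_file" config current-context || return 1
  kubectl --kubeconfig "$kubeconfig_file" auth can-i '*' '*' --all-namespaces
}

# Usage from localhost, right after generating the file:
# verify_spinnaker_kubeconfig "$KUBECONFIG_FILE"
```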

gcloud compute scp $KUBECONFIG_FILE $HALYARD_VM:/mnt/disks/halyard/.secret

Create IAM Service Account w/ GCS Access

Ran from localhost

Create an IAM Service Account with GCS (Google Cloud Storage) access for Spinnaker to use.

```shell
{
SERVICE_ACCOUNT_NAME=spinnaker-itsmetommy-sa
SERVICE_ACCOUNT_FILE=spinnaker-itsmetommy-gcs-sa.json
SERVICE_ACCOUNT_DISPLAY_NAME="Spinnaker Account"
PROJECT="$(gcloud info --format='value(config.project)')"

# Create Service Account (quote the display name, since it contains a space)
gcloud iam service-accounts create \
  $SERVICE_ACCOUNT_NAME \
  --project $PROJECT \
  --display-name "$SERVICE_ACCOUNT_DISPLAY_NAME"

sleep 10

# List Service Account Email
SA_EMAIL=$(gcloud iam service-accounts list \
  --project=$PROJECT \
  --filter="email ~ $SERVICE_ACCOUNT_NAME" \
  --format='value(email)')

# Associate Role
gcloud projects add-iam-policy-binding \
  $PROJECT \
  --role roles/storage.admin \
  --member serviceAccount:$SA_EMAIL

# Download Service Account key
gcloud iam service-accounts keys create \
  $SERVICE_ACCOUNT_FILE \
  --project $PROJECT \
  --iam-account $SA_EMAIL
}
```

Copy the GCS Service Account key to the Halyard Instance.

gcloud compute scp $SERVICE_ACCOUNT_FILE $HALYARD_VM:/mnt/disks/halyard/.secret

Set Ubuntu Permissions

Ran from halyard GCE Instance

sudo chown -R ubuntu:ubuntu /mnt/disks/halyard

Why do we need to change the owner?

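If you look at the UID/GID of the ubuntu user, you’ll see that it is 1000:1000. This is the same UID/GID as the spinnaker user inside the Halyard docker container. Because the directories under /mnt/disks/halyard are bind-mounted into the container, the container’s spinnaker user can only write to them (and read the keys in .secret) if they are owned by UID/GID 1000 on the host.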
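To confirm the ownership on the host before starting the container, compare the numeric owner of each bind-mounted directory with the container's UID. A minimal sketch with these assumptions:

- It uses GNU `stat` (`-c '%u'`), as found on Ubuntu.
- The expected UID 1000 comes from the `id` output in the container.
- The function name is hypothetical.

```shell
# Report any bind-mounted Halyard directory whose owner UID differs from the
# UID the Halyard container runs as (1000 for the spinnaker user).
check_halyard_ownership() {
  local working_dir="${1:-/mnt/disks/halyard}" expected_uid="${2:-1000}"
  local status=0 dir owner_uid
  for dir in "$working_dir/.hal" "$working_dir/.secret" "$working_dir/resources"; do
    owner_uid=$(stat -c '%u' "$dir") || { status=1; continue; }
    if [ "$owner_uid" != "$expected_uid" ]; then
      echo "$dir is owned by UID $owner_uid, expected $expected_uid" >&2
      status=1
    fi
  done
  return "$status"
}

# Usage on the GCE instance:
# check_halyard_ownership /mnt/disks/halyard 1000
```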

# From GCE halyard instance

grep ubuntu /etc/passwd

ubuntu:x:1000:1000:Ubuntu:/home/ubuntu:/bin/bash

# From halyard docker container

id

uid=1000(spinnaker) gid=1000(spinnaker) groups=1000(spinnaker)

Create Storage Bucket

Ran from localhost

GCP_PROJECT=$(gcloud info --format='value(config.project)')

BUCKET=spinnaker-itsmetommy-${GCP_PROJECT}

gsutil mb gs://$BUCKET

Start Halyard container

Ran from halyard GCE Instance

```shell
{
WORKING_DIRECTORY=/mnt/disks/halyard
sudo docker run --name halyard -d \
  -v ${WORKING_DIRECTORY}/.hal:/home/spinnaker/.hal \
  -v ${WORKING_DIRECTORY}/.secret:/home/spinnaker/.secret \
  -v ${WORKING_DIRECTORY}/resources:/home/spinnaker/resources \
  gcr.io/spinnaker-marketplace/halyard:stable
}
```

Verify.

$ sudo docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

d6123302a8f2 gcr.io/spinnaker-marketplace/halyard:stable "/bin/sh -c \"/opt/ha…" 13 seconds ago Up 7 seconds halyard

Enter the Halyard container

Ran from halyard GCE Instance

sudo docker exec -it halyard bash

Ran from halyard docker container

Create a friendlier environment.

export PS1="\h:\w \u\$ "

alias ll='ls -alh'

cd ~

Set Up Storage Bucket

Ran from halyard container

Note: Make sure to update the project ID field with your project ID (GCP_PROJECT=xxxxx).
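Instead of typing the project ID by hand, you can read it from the service account key. Keys created with `gcloud iam service-accounts keys create` include a `project_id` field. A minimal sketch with these assumptions:

- It uses `sed` rather than `jq`, assuming `jq` may not be available in the Halyard image.
- It assumes the pretty-printed, one-field-per-line JSON that gcloud writes.
- The function name is hypothetical.

```shell
# Print the project_id field from a GCP service account key file.
project_id_from_key() {
  sed -n 's/^[[:space:]]*"project_id":[[:space:]]*"\([^"]*\)".*/\1/p' "$1"
}

# Usage inside the Halyard container:
# GCP_PROJECT=$(project_id_from_key /home/spinnaker/.secret/spinnaker-itsmetommy-gcs-sa.json)
```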

```shell
GCP_PROJECT=xxxxx
BUCKET_LOCATION=us
BUCKET=spinnaker-itsmetommy-${GCP_PROJECT}
SERVICE_ACCOUNT_FILE=/home/spinnaker/.secret/spinnaker-itsmetommy-gcs-sa.json

hal config storage gcs edit \
  --project $GCP_PROJECT \
  --bucket-location $BUCKET_LOCATION \
  --bucket $BUCKET \
  --json-path $SERVICE_ACCOUNT_FILE \
  && hal config storage edit --type gcs
```

Add the kubeconfig and cloud provider to Spinnaker (via Halyard)

Ran from halyard docker container

Configure the kubeconfig and account.

```shell
SA_NAMESPACE=spinnaker
ACCOUNT_NAME=spinnaker
KUBECONFIG_FILE=/home/spinnaker/.secret/kubeconfig-spinnaker-itsmetommy-system-sa

# Enable the Kubernetes cloud provider
hal config provider kubernetes enable

# Add the account
hal config provider kubernetes account add $ACCOUNT_NAME \
  --provider-version v2 \
  --kubeconfig-file $KUBECONFIG_FILE \
  --only-spinnaker-managed true \
  --namespaces $SA_NAMESPACE
```

Enable Artifacts

Ran from halyard docker container

Within Spinnaker, ‘artifacts’ are consumable references to items that live outside of Spinnaker (for example, a file in a git repository or a file in an S3 bucket). This feature must be explicitly turned on.

(Source: Armory)

hal config features edit --artifacts true

hal config artifact http enable # Optional

Deploy distributed

Ran from halyard docker container

ACCOUNT_NAME=spinnaker

hal config deploy edit --type distributed --account-name $ACCOUNT_NAME

Choose Spinnaker Version

Ran from halyard docker container

Specify a version, for example 1.17.0.

# Specify version

hal version list

export VERSION=1.17.0

hal config version edit --version $VERSION

Install Spinnaker

Ran from halyard docker container

The complete Spinnaker installation takes about 10 minutes.

hal deploy apply

Ran from localhost

Feel free to watch the pods being created in the spinnaker namespace.

Note: On macOS, install watch with brew install watch.
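If you would rather not install `watch`, `kubectl wait` can block until every pod in the namespace reports Ready. A sketch with these assumptions:

- The function name is hypothetical.
- The 15-minute timeout is an arbitrary choice.
- Halyard has already created the pods. The command fails if no pods exist yet.

```shell
# Block until all pods in the Spinnaker namespace are Ready, or time out.
wait_for_spinnaker_pods() {
  local namespace="${1:-spinnaker}" timeout="${2:-15m}"
  kubectl wait pod --all \
    --for=condition=Ready \
    --namespace "$namespace" \
    --timeout "$timeout"
}

# Usage from localhost, once the spin-* pods have appeared:
# wait_for_spinnaker_pods spinnaker 15m
```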

watch kubectl get pods -n spinnaker

Example

Every 2.0s: kubectl get pods -n spinnaker

NAME READY STATUS RESTARTS AGE

spin-clouddriver-7c7df4767d-rr5b7 1/1 Running 0 9m52s

spin-deck-7df9f8984f-h5rtk 1/1 Running 0 9m55s

spin-echo-cf7976d6b-njfm2 1/1 Running 0 9m54s

spin-front50-554dbcf96f-86mdj 1/1 Running 0 9m53s

spin-gate-78cc89fb4f-g2mv2 1/1 Running 0 9m57s

spin-orca-6969c7d4-6dnfz 1/1 Running 0 9m53s

spin-redis-56fd8c6bb6-49sk5 1/1 Running 0 9m57s

spin-rosco-676b7fdd55-xxb9n 1/1 Running 0 9m51s

Connect to Spinnaker

Ran from localhost

NAMESPACE=spinnaker

DECK_POD=$(kubectl -n ${NAMESPACE} get pod -l cluster=spin-deck -ojsonpath='{.items[0].metadata.name}')

GATE_POD=$(kubectl -n ${NAMESPACE} get pod -l cluster=spin-gate -ojsonpath='{.items[0].metadata.name}')

kubectl -n ${NAMESPACE} port-forward ${DECK_POD} 9000 &

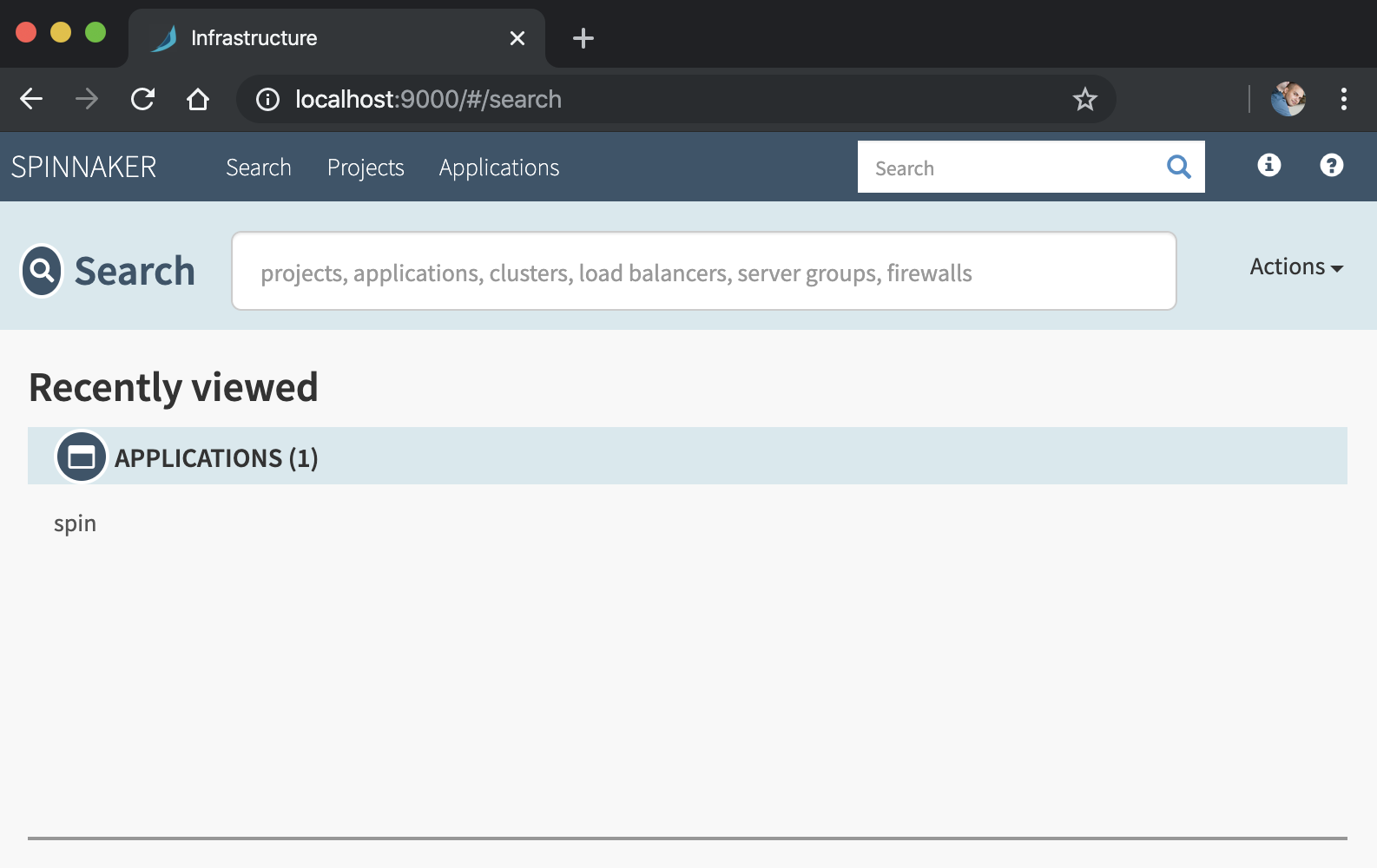

kubectl -n ${NAMESPACE} port-forward ${GATE_POD} 8084 &

Open browser.

open http://localhost:9000
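A distributed Halyard deployment should also create Kubernetes Services named `spin-deck` and `spin-gate`. You can port-forward to those instead of looking up pod names. Note that a forward to a Service still attaches to a single pod, so restart it if that pod is replaced. A sketch, with hypothetical function names, that starts both forwards in the background and records their PIDs so they can be stopped later:

```shell
# Start background port-forwards to Deck (UI, 9000) and Gate (API, 8084)
# through their Services, saving the PIDs so they can be stopped later.
start_spinnaker_port_forwards() {
  local namespace="${1:-spinnaker}"
  kubectl -n "$namespace" port-forward svc/spin-deck 9000 > /dev/null 2>&1 &
  DECK_FORWARD_PID=$!
  kubectl -n "$namespace" port-forward svc/spin-gate 8084 > /dev/null 2>&1 &
  GATE_FORWARD_PID=$!
}

# Stop the forwards started above.
stop_spinnaker_port_forwards() {
  kill "$DECK_FORWARD_PID" "$GATE_FORWARD_PID" 2>/dev/null
}
```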

Clean Up

Ran from localhost
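Deleting a service account does not immediately remove its role binding from the project's IAM policy; the binding lingers as a deleted member for a while. Removing the binding before deleting the account keeps the policy clean and may help avoid the stale-binding trouble described in Error 5. A minimal sketch; the function name is hypothetical and the role matches the one granted earlier.

```shell
# Remove the storage.admin binding granted to the Spinnaker service account.
remove_spinnaker_gcs_binding() {
  local project="$1" sa_email="$2"
  gcloud projects remove-iam-policy-binding "$project" \
    --member "serviceAccount:${sa_email}" \
    --role roles/storage.admin
}

# Usage (example values):
# remove_spinnaker_gcs_binding xxxxxx spinnaker-itsmetommy-sa@xxxxxx.iam.gserviceaccount.com
```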

{

kubectl delete ns spinnaker

kubectl delete clusterroleBinding spinnaker-admin

gsutil rm -r gs://[BUCKET]

gcloud iam service-accounts delete [SA-NAME]@[PROJECT-ID].iam.gserviceaccount.com

gcloud compute instances delete [GCE_INSTANCE] --zone=[ZONE]

gcloud compute disks delete [PERSISTENT_DISK] --zone=[ZONE]

}

Example

{

kubectl delete ns spinnaker

kubectl delete clusterroleBinding spinnaker-admin

gsutil rm -r gs://spinnaker-itsmetommy-xxxxxx

gcloud iam service-accounts delete spinnaker-itsmetommy-sa@xxxxxx.iam.gserviceaccount.com

gcloud compute instances delete halyard-itsmetommy --zone=us-west1-a

gcloud compute disks delete halyard-itsmetommy-pd --zone=us-west1-a

}

Errors

Error 1

hal config provider kubernetes enable

+ Get current deployment

Success

- Edit the kubernetes provider

Failure

Problems in Global:

! ERROR Failure writing your halconfig to path

"/home/spinnaker/.hal/config": /home/spinnaker/.hal/config

- Failed to enable kubernetes

Fix

Fix the permissions by making sure that /mnt/disks/halyard is owned by ubuntu:ubuntu (UID/GID 1000, matching the spinnaker user in the container).

# Ran on GCE instance

sudo chown -R ubuntu:ubuntu /mnt/disks/halyard

Error 2

hal config storage gcs edit \

> --project $GCP_PROJECT \

> --bucket-location $BUCKET_LOCATION \

> --bucket $BUCKET \

> --json-path $SERVICE_ACCOUNT_DEST

+ Get current deployment

Success

+ Get persistent store

Success

- Edit persistent store

Failure

Problems in default.persistentStorage:

- WARNING Your deployment will most likely fail until you configure

and enable a persistent store.

Problems in default.persistentStorage.gcs:

! ERROR Failed to ensure the required bucket

"spinnaker-itsmetommy-xxxxxx" exists: java.io.FileNotFoundException:

/home/spinnaker/.secret/spinnaker-itsmetommy-gcs-sa.json (Permission denied)

- Failed to edit persistent store "gcs".

Fix

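Check the ownership of the service account key. Inside the container the file is /home/spinnaker/.secret/spinnaker-itsmetommy-gcs-sa.json, but on the GCE instance the same file lives in the bind-mounted directory, /mnt/disks/halyard/.secret/spinnaker-itsmetommy-gcs-sa.json, and it must be owned by ubuntu (UID 1000).

# Ran from the GCE instance

sudo chown ubuntu:ubuntu /mnt/disks/halyard/.secret/spinnaker-itsmetommy-gcs-sa.json

Error 3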

Run hal deploy connect to connect to Spinnaker.

/home/spinnaker/.hal/default/install.sh must be executed with root permissions; exiting

! ERROR Error encountered running script. See above output for more

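details.

Fix

Halyard’s default deployment type, localdebian, installs Spinnaker onto the machine running Halyard (here, the container), which requires root. Deploy to the Kubernetes account instead: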

hal config deploy edit --type distributed --account-name $ACCOUNT_NAME

Error 4

{

> WORKING_DIRECTORY=/mnt/disks/halyard

>

> docker run --name halyard -it --rm \

> -v ${WORKING_DIRECTORY}/.hal:/home/spinnaker/.hal \

> -v ${WORKING_DIRECTORY}/.secret:/home/spinnaker/.secret \

> -v ${WORKING_DIRECTORY}/resources:/home/spinnaker/resources \

> gcr.io/spinnaker-marketplace/halyard:stable

> }

docker: Got permission denied while trying to connect to the Docker daemon socket at unix:///var/run/docker.sock: Post http://%2Fvar%2Frun%2Fdocker.sock/v1.39/containers/create?name=halyard: dial unix /var/run/docker.sock: connect: permission denied.

See 'docker run --help'.

Add yourself to the docker group (the change only applies to new login sessions, so log out and back in afterwards) or run the command with sudo docker run.

# Ran from the GCE instance

sudo usermod -aG docker $USER

OR (I used this option)

```shell
{
WORKING_DIRECTORY=/mnt/disks/halyard
sudo docker run --name halyard -it --rm \
  -v ${WORKING_DIRECTORY}/.hal:/home/spinnaker/.hal \
  -v ${WORKING_DIRECTORY}/.secret:/home/spinnaker/.secret \
  -v ${WORKING_DIRECTORY}/resources:/home/spinnaker/resources \
  gcr.io/spinnaker-marketplace/halyard:stable
}
```

Error 5

hal config storage gcs edit \

> --project $GCP_PROJECT \

> --bucket-location $BUCKET_LOCATION \

> --bucket $BUCKET \

> --json-path $SERVICE_ACCOUNT_DEST \

> \

> && hal config storage edit --type gcs

+ Get current deployment

Success

+ Get persistent store

Success

- Edit persistent store

Failure

Problems in default.persistentStorage:

- WARNING Your deployment will most likely fail until you configure

and enable a persistent store.

Problems in default.persistentStorage.gcs:

! ERROR Failed to ensure the required bucket

"spinnaker-itsmetommy-xxxxx" exists:

com.google.api.client.googleapis.json.GoogleJsonResponseException: 403 Forbidden

{

"code" : 403,

"errors" : [ {

"domain" : "global",

"message" : "spinnaker-itsmetommy-sa@xxxxx.iam.gserviceaccount.com

does not have storage.buckets.get access to

spinnaker-itsmetommy-xxxxx.",

"reason" : "forbidden"

} ],

"message" : "spinnaker-itsmetommy-sa@xxxxx.iam.gserviceaccount.com

does not have storage.buckets.get access to

spinnaker-itsmetommy-xxxxx."

}

- Failed to edit persistent store "gcs".

Fix

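This appears to be a problem on Google’s end. If you create a Service Account, delete it, and then re-create it with the same name, access can be denied even though the role binding looks correct. Google’s IAM documentation notes that role bindings belonging to a deleted service account do not apply to a new account created with the same name, and IAM changes can also take a few minutes to propagate. I tested this theory by creating a new Service Account with a never-before-used name, and everything worked.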
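When you hit this error, check which project roles the current service account actually holds. The sketch below uses the `--flatten`/`--filter` pattern for `gcloud projects get-iam-policy`, and the function name is hypothetical. If `roles/storage.admin` is missing from the output, re-run the `add-iam-policy-binding` step and allow a few minutes for the change to propagate.

```shell
# List the project-level roles bound to a given service account.
roles_for_service_account() {
  local project="$1" sa_email="$2"
  gcloud projects get-iam-policy "$project" \
    --flatten="bindings[].members" \
    --filter="bindings.members:serviceAccount:${sa_email}" \
    --format="value(bindings.role)"
}

# Usage from localhost (example values):
# roles_for_service_account xxxxx spinnaker-itsmetommy-sa@xxxxx.iam.gserviceaccount.com
```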